How to Use a Proxy With Axios and Node.js

Vilius Dumcius

Axios is a popular HTTP client for Node.js and is commonly used for web scraping. It lets developers download the contents of websites, which can then be parsed with a library like Cheerio.

By default, Axios exposes your IP address to every website you connect to. If you send many requests, this can lead to consequences such as an IP ban. To avoid that, web scrapers use proxies: servers that act as middlemen between your client and the target server. They help you hide your web scraping activity and protect your IP address.

This article will show you how to use proxies together with Axios when scraping with Node.js.

Why Should You Use Proxies?

When you connect to a server, it can see the IP address your request comes from. For a one-off request, this doesn't matter. But if you plan to request many pages to scrape information from a website, your IP address might be flagged by a detection system or an administrator and blocked. You then won't be able to access the website until you change your IP address.

Proxies act as middlemen and forward your requests to the server. The server sees the IP address of the proxy as the origin of the request, and your real IP address is known only to the proxy. This lets you scrape large amounts of data and make many more requests than you could from a single IP address. The best proxy providers offer a pool of IP addresses behind a single authenticated endpoint, which lets you rotate IPs on demand.

How to Use Proxies in Node.js

This tutorial will show you how to use proxies in Node.js via Axios.

Setup

First, make sure you have Node.js installed on your device. If you don’t, you can use the official instructions to download and install it.

After that, create a new folder called axios_proxy and move into it. Run the npm init command to create a new Node.js project (add -y to accept the defaults).

Then, install Axios and Cheerio with the following terminal command. Axios will be used to make web requests, while Cheerio will be used to parse HTML in the web scraping example.

```shell
npm i axios cheerio
```

Finally, create a file called index.js and open it in your favorite code editor.

Basic HTTP Request With Axios

Here’s how a basic HTTP request made with Axios looks.

```javascript
const axios = require('axios');

// Download the page and print its raw HTML
axios.get('https://quotes.toscrape.com/')
  .then((r) => {
    console.log(r.data);
  })
  .catch((err) => {
    console.error(err.message);
  });
```

All the code above does is request the content of a web page (in this case, Quotes to Scrape) and print it out.

If you use a library like Cheerio, you can parse the response to extract the information you need.

```javascript
const axios = require('axios');
const cheerio = require('cheerio');

axios.get('https://quotes.toscrape.com/')
  .then((r) => {
    // Load the HTML into Cheerio
    const $ = cheerio.load(r.data);
    const quote_blocks = $('.quote');

    // Extract the text and author of every quote on the page
    const quotes = quote_blocks.map((_, quote_block) => {
      const text = $(quote_block).find('.text').text();
      const author = $(quote_block).find('.author').text();
      return { text: text, author: author };
    }).toArray();

    console.log(quotes);
  })
  .catch((err) => {
    console.error(err.message);
  });
```

Quotes to Scrape is built specifically for web scraping practice. But a website like Amazon may make you solve captchas or log in if it detects that a lot of requests have been made from the same address. For this reason, it's useful to add a proxy to your Axios requests.

Using a Proxy with Axios

To use a proxy server with Axios, create an object that holds the protocol, host (IP address), and port of the proxy that you want to connect to.

Here’s an example:

```javascript
const proxy = {
  protocol: 'http',
  host: '176.193.113.206',
  port: 8989
};
```

After that, pass the proxy in the request config, which is the second argument of axios.get():

```javascript
axios.get('https://quotes.toscrape.com/', { proxy: proxy })
```

Requests will now be routed through the proxy that you provided.
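Proxies are often written as a single host:port string, for example in downloadable proxy lists. As a minimal sketch, assuming that format and plain HTTP proxies, a hypothetical helper called parseProxyString can convert such a string into the object shape shown above:

```javascript
// Hypothetical helper: converts a "host:port" string into the proxy object
// shape Axios expects. Assumes plain HTTP proxies and IPv4 addresses or
// hostnames (IPv6 addresses contain colons and are not handled here).
function parseProxyString(entry, protocol = 'http') {
  const parts = entry.trim().split(':');
  if (parts.length !== 2) {
    throw new Error(`Expected "host:port", got "${entry}"`);
  }

  const host = parts[0];
  const port = Number(parts[1]);

  // Reject malformed entries instead of passing them on to Axios
  if (host === '' || !Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid proxy entry: "${entry}"`);
  }

  return { protocol, host, port };
}
```

For example, parseProxyString('176.193.113.206:8989') returns the same object as the proxy variable above. Mapping it over the lines of a proxy list gives you an array of proxy objects to choose from.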

How to Find a Proxy?

Of course, there’s a catch. If you’re new to web scraping, you probably don’t have access to a proxy.

There are two ways to find a proxy to connect to. You can either scour the internet for lists of free proxy servers or pay for access to a professional proxy server.

In the first case, the proxy servers you find will most likely be slow, unsafe, and unreliable, and each will give you just one IP address. These issues can be addressed, but doing so takes quite a bit of time and expertise.

In the second case, the proxy service provider will give you a secure endpoint to connect to. It will usually also provide proxy rotation by default, which means it can change your IP address on every request. This masks your IP address and makes it much harder for the website to tell that your requests come from a single scraper.

In addition, because there is a lot of competition between proxy services, good, reliable services are available for a fairly small price: around 5 dollars per GB.

In this article, you will learn how to work with both types of proxies. So if you want to strike out on your own, that’s all right.
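If you do go with free proxies, one way to deal with their unreliability is to test them before use. Below is a minimal sketch, assuming you already have the proxies as objects like the one shown earlier. The hypothetical filterWorkingProxies runs a caller-supplied check against every proxy concurrently and keeps only the proxies that pass. The checkProxy function is also an assumption: you write it yourself, for example as a small axios.get() call with a timeout.

```javascript
// Hypothetical helper: keeps only the proxies that pass a health check.
// `checkProxy` is assumed to be an async function that resolves if the
// proxy works and rejects otherwise, for example:
//   (proxy) => axios.get('https://quotes.toscrape.com/', { proxy, timeout: 5000 })
async function filterWorkingProxies(proxies, checkProxy) {
  // Run all checks concurrently; Promise.allSettled never rejects,
  // so one dead proxy doesn't abort the whole batch
  const results = await Promise.allSettled(proxies.map((proxy) => checkProxy(proxy)));

  // Keep the proxies whose check resolved successfully
  return proxies.filter((_, i) => results[i].status === 'fulfilled');
}
```

Because free proxies can go down at any time, a list filtered this way is only a snapshot, so you may want to re-run the check periodically.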

But if you are looking for a reliable proxy provider that offers hard-to-detect proxies ethically sourced from real devices all around the world, take a look at IPRoyal residential proxies. They are easy to set up and use, and the next section shows an example of how you can use them with Axios.

Using an Authenticated Proxy

Proxies provided by professional services usually require authentication. In addition to all the usual values used to define a proxy, you need to provide a username and password to prove that you have paid for their services.

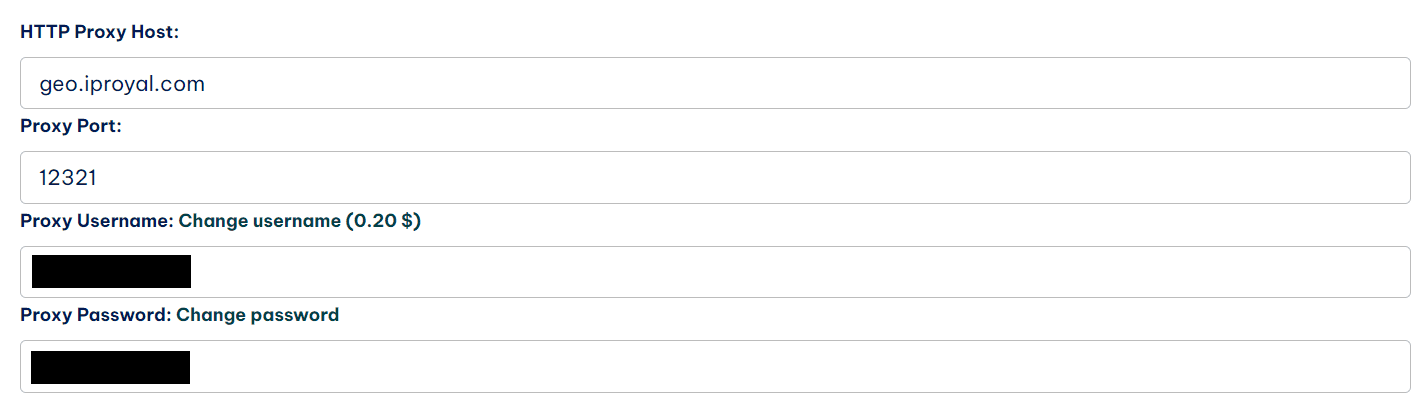

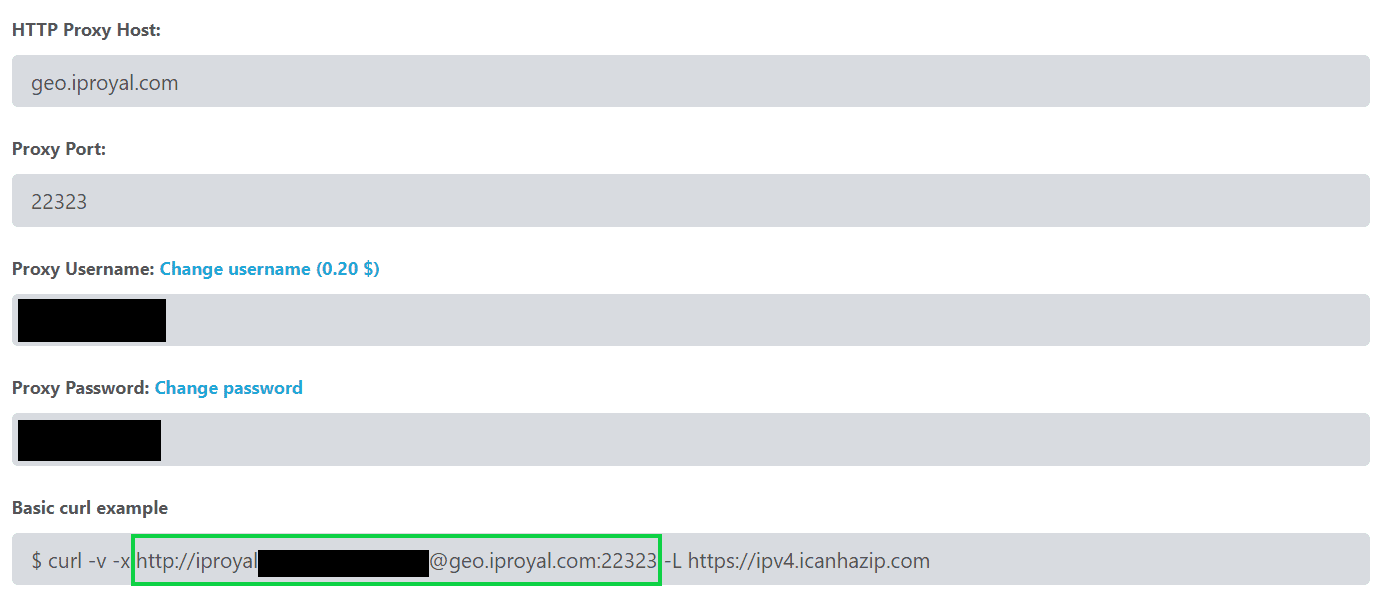

If you buy proxy services, the provider should supply you with all the available information: the host, port, username, and password you need to connect to the proxy.

For example, if you use IPRoyal residential proxies, you can find the necessary information in your dashboard.

Now, you can put all the information provided into a proxy variable. Copy the code below and fill out the host, port, username, and password fields with the information that your provider has supplied.

```javascript
const proxy = {
  protocol: 'http',
  host: 'geo.iproyal.com',
  port: 12321,
  auth: {
    username: 'cool username',
    password: 'cool password'
  }
};
```

After that, pass the proxy in the request config of your axios.get() calls:

```javascript
axios.get('https://quotes.toscrape.com/', { proxy: proxy })
  .then((r) => {
    // ...
  });
```

Here's an example of how a small web scraping script using an authenticated proxy and Axios might look:

```javascript
const axios = require('axios');
const cheerio = require('cheerio');

// Replace these values with the details from your proxy provider
const proxy = {
  protocol: 'http',
  host: 'geo.iproyal.com',
  port: 12321,
  auth: {
    username: 'cool username',
    password: 'cool password'
  }
};

axios.get('https://quotes.toscrape.com/', { proxy: proxy })
  .then((r) => {
    const $ = cheerio.load(r.data);
    const quote_blocks = $('.quote');

    // Extract the text and author of every quote on the page
    const quotes = quote_blocks.map((_, quote_block) => {
      const text = $(quote_block).find('.text').text();
      const author = $(quote_block).find('.author').text();
      return { text: text, author: author };
    }).toArray();

    console.log(quotes);
  })
  .catch((err) => {
    console.error(err.message);
  });
```

If your provider rotates IPs on every request, as rotating residential proxies typically do by default, each request made through the authenticated proxy will come from a different IP address. This helps you avoid detection when you need to scrape hundreds or thousands of pages.

Setting a Proxy via Environment Variables

Storing sensitive information, such as the username and password you use for a proxy, in code is not very secure. If you accidentally share the file with another person or push it to a public GitHub repository, the credentials will be exposed.

To avoid that, this information is usually stored in environment variables: user-defined values that are accessible to programs running on a computer.

Using the terminal, you can define HTTP_PROXY and HTTPS_PROXY environment variables that contain your proxy's URL, including the host, port, and (optionally) authentication details.

If you’re using IPRoyal residential proxies, this link is accessible in your dashboard.

Copy the link and set it as an environment variable for both HTTP and HTTPS. If you're using the Windows Command Prompt, use the set command:

```bat
set HTTP_PROXY=http://username:password@host:port
set HTTPS_PROXY=http://username:password@host:port
```

If you're using Linux or macOS, use the export command instead:

```shell
export HTTP_PROXY=http://username:password@host:port
export HTTPS_PROXY=http://username:password@host:port
```

If you run your Axios web scraping script from the same terminal session, it will use the defined proxy by default. Axios automatically looks for the HTTP_PROXY and HTTPS_PROXY environment variables and routes requests through the proxies they specify.
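Axios only falls back to these variables when a request doesn't specify its own proxy, and setting proxy: false in the request config turns them off. If you'd rather build an explicit proxy object from the same URL (for example, to combine it with a list of proxies), here is a minimal sketch using Node's built-in URL class. The helper name proxyFromUrl is hypothetical.

```javascript
// Hypothetical helper: turns a proxy URL such as
// "http://username:password@host:port" (the format used for HTTP_PROXY)
// into the proxy object shape Axios accepts in its request config.
function proxyFromUrl(proxyUrl) {
  const url = new URL(proxyUrl); // throws a TypeError on malformed URLs

  const protocol = url.protocol.replace(/:$/, ''); // "http:" -> "http"
  // URL leaves `port` empty when it is the scheme's default, so fill it in
  const port = url.port ? Number(url.port) : (protocol === 'https' ? 443 : 80);

  const proxy = { protocol, host: url.hostname, port };

  // Credentials in a URL are percent-encoded, so decode them for Axios
  if (url.username) {
    proxy.auth = {
      username: decodeURIComponent(url.username),
      password: decodeURIComponent(url.password),
    };
  }
  return proxy;
}
```

For example, proxyFromUrl(process.env.HTTPS_PROXY) produces an object you can pass as { proxy } to axios.get() without hardcoding your credentials. Special characters in the username or password (such as @) must be percent-encoded in the URL for it to parse correctly.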

Rotating Proxies

If you have several proxy servers available but don't use a professional proxy service that rotates proxies by default, you can put together a simple working solution that picks a random proxy from a list for each request.

First, instead of creating one proxy variable, create an array with multiple proxies.

```javascript
const proxies = [
  {
    protocol: 'http',
    host: '128.172.183.18',
    port: 8000
  },
  {
    protocol: 'http',
    host: '18.4.13.6',
    port: 8080
  },
  {
    protocol: 'http',
    host: '65.108.34.224',
    port: 8888
  }
];
```

Then, create a function that chooses one of the proxies at random.

```javascript
// Return a random element of the proxies array
function get_random_proxy(proxies) {
  return proxies[Math.floor(Math.random() * proxies.length)];
}
```

Now you can call it on the proxies array to pick a random proxy every time you make a request.

```javascript
axios.get('https://quotes.toscrape.com/', { proxy: get_random_proxy(proxies) })
  .then((r) => {
    // …
  });
```

Be careful, though: this solution doesn't account for the fact that proxy servers might break or shut down. If you're using unreliable proxy servers (ones that can fail to return the requested information), you will also need a retry mechanism that detects proxy failures.
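Here is a minimal sketch of such a retry mechanism, assuming a proxies array like the one above. The hypothetical getWithRetries picks a random proxy; if the request fails, it drops that proxy from the candidates and tries another, up to a fixed number of attempts. The request function is passed in as a parameter (an assumption of this sketch), so the helper can wrap axios.get() and can also be tested without network access.

```javascript
// Hypothetical helper: retries a request through different random proxies.
// `request` is assumed to be a function (url, proxy) => Promise, e.g.
//   (url, proxy) => axios.get(url, { proxy, timeout: 10000 })
async function getWithRetries(request, url, proxies, maxAttempts = 3) {
  const candidates = [...proxies]; // copy so the caller's array isn't modified
  let lastError = new Error('No proxies available');

  for (let attempt = 0; attempt < maxAttempts && candidates.length > 0; attempt++) {
    // Pick a random proxy and remove it, so a failed proxy isn't retried
    const index = Math.floor(Math.random() * candidates.length);
    const [proxy] = candidates.splice(index, 1);

    try {
      return await request(url, proxy);
    } catch (err) {
      lastError = err; // remember the failure and move on to another proxy
    }
  }
  throw lastError;
}
```

As written, this retries on every error, including HTTP errors such as a 404 that a different proxy won't fix. In practice, you may want to rethrow those immediately and retry only on connection failures and timeouts.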

Conclusion

Using proxy services is a great way to enable large-scale web scraping, since it lets you hide web scraping activity from website administrators. If you’re using Axios, it’s quite easy to set up both unauthenticated and authenticated proxies for your web scraping projects.

But sometimes, a proxy alone is not enough to make your traffic look natural. In these cases, you can use a browser automation tool like Puppeteer to mimic a real user visiting the site. To learn more about this tool, check out our extensive guide on Puppeteer.

FAQ

AxiosError: connect ETIMEDOUT

This error means that Axios timed out while trying to connect to a remote server. Depending on which server it failed to connect to (the address is listed in the error), either the website you're trying to reach or the proxy is down or too slow to respond. To keep proxy failures from interrupting your scraping, either use a reliable proxy provider or write code that rotates proxies and retries failed requests with a different proxy.

AxiosError: Request failed with status code 404

This error means that Axios connected to the server, but the server had no resource to serve because the URL you provided was incorrect (it doesn't correspond to any page on the server).

AxiosError: Request failed with status code 407

This error code frequently appears when you try to connect to an authenticated proxy without including your credentials in the request. To solve it, make sure your proxy object has an auth field containing your username and password.

It should look like this:

```javascript
const proxy = {
  protocol: 'http',
  host: 'geo.iproyal.com',
  port: 12321,
  auth: {
    username: 'cool username',
    password: 'cool password'
  }
};
```
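The three FAQ cases above can be told apart from the error object itself. The following is a sketch, assuming Axios's documented error shape: error.response is set when a server replied with an error status, while error.code carries the network error code (such as ETIMEDOUT, or ECONNABORTED for Axios's own timeout option) when no response arrived. The helper name explainAxiosError is hypothetical.

```javascript
// Hypothetical helper: turns an error thrown by axios.get() into a hint that
// matches the FAQ entries above. Relies on the documented AxiosError shape:
// `response` is present when a server replied, `code` is set for network errors.
function explainAxiosError(err) {
  if (err.response) {
    switch (err.response.status) {
      case 404:
        return 'Not found: check that the URL points to an existing page.';
      case 407:
        return 'Proxy authentication required: add an auth field to the proxy object.';
      default:
        return `Server replied with status ${err.response.status}.`;
    }
  }
  // No response: the connection to the proxy or the target site failed
  if (err.code === 'ETIMEDOUT' || err.code === 'ECONNABORTED') {
    return 'Timed out: the target site or the proxy is likely down or too slow.';
  }
  if (err.code === 'ECONNREFUSED') {
    return 'Connection refused: check the proxy host and port.';
  }
  return `Unexpected error: ${err.message}`;
}
```

For example, you could log explainAxiosError(err) inside the .catch() handler of any of the requests in this article.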

Author

Vilius Dumcius

Product Owner

With six years of programming experience, Vilius specializes in full-stack web development with PHP (Laravel), MySQL, Docker, Vue.js, and TypeScript. Managing a skilled team at IPRoyal for years, he excels in overseeing diverse web projects and custom solutions. Vilius plays a critical role in managing proxy-related tasks for the company, serving as the lead programmer involved in every aspect of the business. Outside of his professional duties, Vilius channels his passion for personal and professional growth, balancing his tech expertise with a commitment to continuous improvement.
