How to Scrape Reddit With Python: A Comprehensive Guide

Justas Vitaitis

Last updated -

In This Article

Previously, you could collect data through Reddit API for a low cost. However, since 2023, the fees have changed, making the entire process extremely expensive . Reddit scraping has become the cheaper option, making it an attractive choice for anyone with some development skills.

Due to the changes, we won’t be covering Reddit API in-depth; instead, we’ll mostly focus on building your own scraper to do the same thing. Additionally, while building a Reddit app (a prerequisite for Reddit API data collection) is fun, the skills aren’t as transferable and useful as learning web scraping.

Web Scraping and Reddit

Web scraping is usually used to collect public web data at scale. Automated bots visit URLs and download the HTML content, which is then parsed to make the information more understandable.

While numerical data (such as pricing) is the most common use case, textual data has a lot of value as well. For example, it has been shown that there’s a correlation between Twitter post sentiment and stock market performance .

Reddit functions closer to Twitter than any pricing data. There are thousands of comments and posts on nearly every imaginable topic. Companies often use scraping to collect that data and then analyze it. A common potential use case is brand image monitoring.

It should be noted, however, that the legality of scraping isn’t black and white. While publicly available data (that is, one that is not under a login screen or otherwise protected) is generally considered scrapable, copyright and personal data protection legislation still holds.

We highly recommend consulting with a legal professional to understand whether your scraping project is within the bounds of the law.

Understanding Reddit’s Structure

Reddit functions similarly to a web forum—it has a homepage, subreddits based on interests, topics, niches, or anything else (akin to subforums), posts, and comments. Users can create a subreddit for free on any imaginable topic or niche.

Any user, barring specific conditions, can post anything on a subreddit. Any user can also leave comments within these posts. Posts may include embedded videos, links, images, or text, while comments are restricted to text and small images.

So, a usual browsing experience would be visiting the Reddit homepage and then heading over to a subreddit of interest. A user would then review all posts under that subreddit and potentially leave a comment on anything they find valuable to participate in.

Additionally, the visibility of posts and comments is decided by upvotes and downvotes. Posts and comments with more of the former get increased visibility, while downvoted posts and comments get pushed down in the feed quicker.

The Reddit API used to be an extremely easy way to extract data from subreddits, posts, and comments. Unfortunately, the new costs (1,000 calls for $0.24) quickly make the endeavor expensive as there are millions of comments and posts all over Reddit.

Scraping, barring the procurement of residential proxies , can be done for free as long as you have the required skills.

Scraping Reddit Data

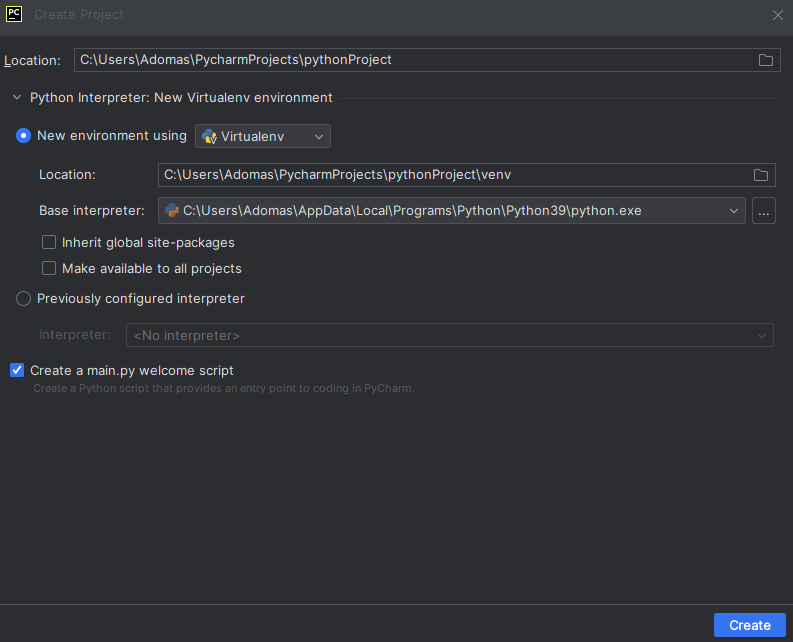

For any Python development work, you’ll need an IDE, which will make coding, debugging, and executing a lot easier. PyCharm Community Edition is a completely free IDE that gives you enough features for most projects.

Once you have PyCharm installed, you can start working on your project. Open up the application, click “ Start a new project ”, and give it a name.

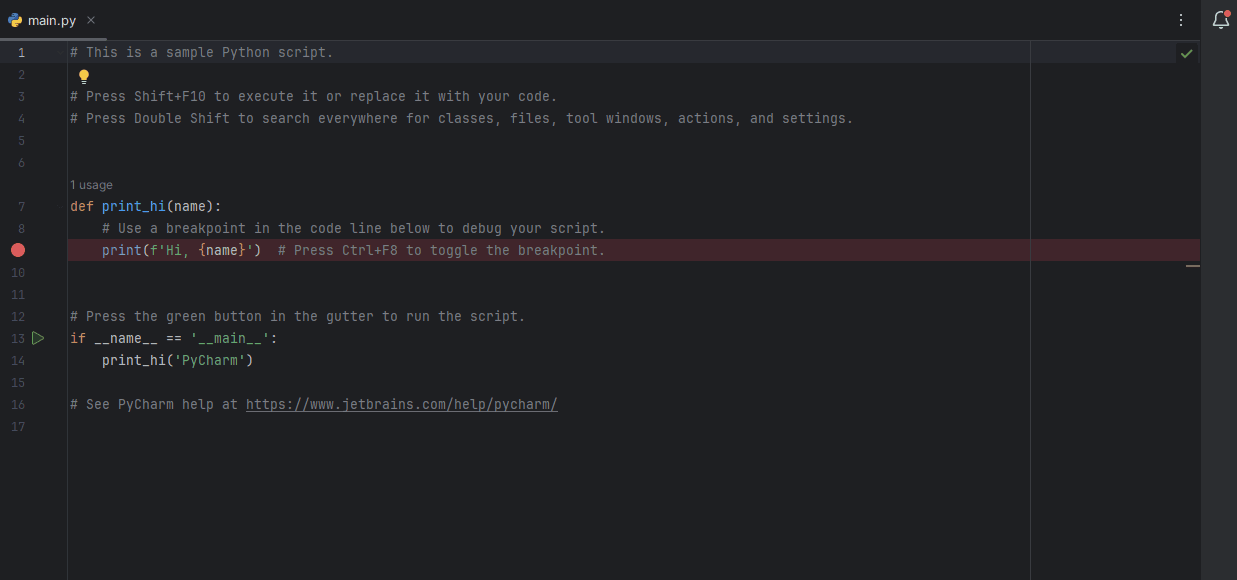

Click on “ Create ” to create a project folder with some files. Open up “ main.py ” if it doesn’t open by default.

We’ll need to install a few libraries to get started with Reddit scraping. Open up the terminal and type:

pip install beautifulsoup4 requests seleniumRequests will allow you to send and receive HTTP messages, while BeautifulSoup is used to easily parse HTML data. Requests, however, will only be used for demonstration purposes as Reddit performs a lot of JavaScript loading. Selenium, a browser automation library, will be the primary way to extract data.

Sending a Request

Start by importing both libraries:

import requests

from bs4 import BeautifulSoupTo send a request, we’re best off defining a new function that takes “url” and “proxies” as its variables:

def fetch_url_content(url, proxies=None):

"""

Fetches content for a given URL using optional proxies.

:param url: URL to fetch content from.

:return: HTML content of the page or None if an error occurs.

"""We can then move on to adding HTTP headers and sending the connection request. Headers are important as default requests library headers may get blocked quickly or even outright.

headers = {'User-Agent': 'Mozilla/5.0'}

try:

response = requests.get(url, headers=headers, proxies=proxies, timeout=10)

if response.status_code == 200:

return response.textOur Python script will attempt to send a connection to a URL, using the headers and proxies outlined above with a custom timeout duration of 10 seconds. If it is successful (HTTP response code is 200), it will return the response text (HTML file).

Everything doesn’t always go as planned, so we should implement minor error handling:

else:

print(f"Failed to fetch {url}: Status code {response.status_code}")

return None

except Exception as e:

print(f"An error occurred while fetching {url}: {e}")

return NoneIf the response status code is not 200, our program will output that it failed to get a proper response and output both the URL and response code. It will, as expected, return the value “None” instead of the response text.

In the case of a different error, our exception block will print a general error with the URL and the exception object, returning “None” once again. Our exception response allows the program to continue running even if something unexpected happens while providing slight debugging benefits.

Here’s how the request function should look like:

def fetch_url_content(url, proxies=None):

"""

Fetches content for a given URL using optional proxies.

:param url: URL to fetch content from.

:return: HTML content of the page or None if an error occurs.

"""

headers = {'User-Agent': 'Mozilla/5.0'}

try:

response = requests.get(url, headers=headers, proxies=proxies, timeout=10)

if response.status_code == 200:

return response.text

else:

print(f"Failed to fetch {url}: Status code {response.status_code}")

return None

except Exception as e:

print(f"An error occurred while fetching {url}: {e}")

return NoneParsing the Data

If our code retrieves the entire HTML file from a subreddit, you can glean a lot of potential information from it. The usual centers of attention are the subreddit description, user information, posts, and comments.

We’ll start with parsing the subreddit title, description, and subscriber counts.

Parsing Subreddit Data

def scrape_subreddit_metadata(html_content):

"""

Scrapes subreddit metadata: description, subscriber count, and title.

:param html_content: HTML content of the subreddit page.

:return: A dictionary with metadata.

"""

soup = BeautifulSoup(html_content, 'html.parser')Our new function will serve as a modular way to scrape subreddit data. We start by defining a “soup” object that takes the response retrieved from the URL. We’ll then move on to extracting the data:

# Find the <shreddit-subreddit-header> element

subreddit_header = soup.find('shreddit-subreddit-header')

if subreddit_header:

# Extract attributes from the <shreddit-subreddit-header> element

description = subreddit_header.get('description')

subscriber_count = subreddit_header.get('subscribers')

title = subreddit_header.get('display-name')

return {

'Description': description,

'Subscriber_count': subscriber_count,

'Title': title

}

else:

return {

'description': 'Not found',

'subscriber_count': 'Not found',

'title': 'Not found'

}From our file, we’ll find three key content pieces - the description, the subscriber count, and the title. All of that data is stored in the ‘shreddit-subreddit-header’ element. Once it’s found, we’ll go through the attributes that store each key data point.

We then use a dictionary to return all three values. If no subreddit header is found, “not found” is returned instead.

Scraping Reddit Post Titles

To scrape posts, we’ll need to find their titles in the HTML source file and perform actions similar to those with metadata. We should note, however, that a single page will have more than one post available, so a loop will be required.

Additionally, post titles are stored dynamically in JavaScript by using a technique called infinite scrolling. The initial page will only have a few titles, so we’ll need a new library to tackle JavaScript—Selenium. Let’s import it:

import requests

from bs4 import BeautifulSoup

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.common.by import By

import timeThe default “time” library is also used as if you want to get a lot of Reddit data, you need to keep scrolling. Time will be used to allow the page to load before continuing to scroll down.

Now, let’s write the Selenium scraping function:

def scrape_post_titles(subreddit_url):

"""

Scrapes titles of posts from the subreddit using Selenium to handle dynamic content loading.

:param subreddit_url: URL of the subreddit page.

:return: A list of post titles.

"""

driver = webdriver.Chrome()

# Open the subreddit page

driver.get(subreddit_url)We’ll use the Chrome webdriver and use the “driver” object to call it when necessary. Note that in newer versions of Selenium you no longer need to add the path to your webdriver or download it separately—they are now included in the package.

Our next line will take a URL as an argument and use the “driver.get” method to visit the page.

# Scroll down to load more posts. Adjust the range and sleep duration as needed.

body = driver.find_element(by=By.TAG_NAME, value='body')

for _ in range(10): # Number of Page Downs to simulate

body.send_keys(Keys.PAGE_DOWN)

time.sleep(1) # Sleep to allow content to load; adjust as needed

# Now, scrape the post titles

post_titles = []

post_links = driver.find_elements(By.XPATH, '//a[@slot="full-post-link"]//faceplate-screen-reader-content')

for link in post_links:

post_titles.append(link.text)

# Close the WebDriver

driver.quit()

return post_titlesOnce our webdriver visits the website, it finds and focuses on the ‘body’ element, which is most of the webpage. After that, we create a loop to send the “PAGE_DOWN” key (which scrolls down the page in increments).

You can edit the range integer to increase or decrease the amount of key presses. Additionally, we default to a sleep of 1 second, but if that’s not enough to consistently load new content, you should increase it.

Once our webdriver has completed the task of scrolling down, we create a list object, “post_titles”, in which we will store all of the titles. We then use find_elements and XPATH to find the post titles in the HTML file.

Finally, we initiate another loop that goes through all of the acquired post titles and adds them to the list we defined previously.

After that, the Selenium webdriver is closed, and the list is returned.

Take note that we’re not currently using headless browsing. It’s usually wise to see if everything functions properly by seeing a regular version of the browser and then move on to headless versions.

Let’s run a quick test. Include the imports, the post title scraping function, a call to it and print out the results. Here’s how the code should look like (we’re omitting other functions for brevity):

import requests

from bs4 import BeautifulSoup

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.common.by import By

import time

def scrape_post_titles(subreddit_url):

"""

Scrapes titles of posts from the subreddit using Selenium to handle dynamic content loading.

:param subreddit_url: URL of the subreddit page.

:return: A list of post titles.

"""

# Setup WebDriver

driver = webdriver.Chrome()

# Open the subreddit page

driver.get(subreddit_url)

# Scroll down to load more posts. Adjust the range and sleep duration as needed.

body = driver.find_element(by=By.TAG_NAME, value='body')

for _ in range(10): # Number of Page Downs to simulate

body.send_keys(Keys.PAGE_DOWN)

time.sleep(1) # Sleep to allow content to load; adjust as needed

# Now, scrape the post titles

post_titles = []

post_links = driver.find_elements(By.XPATH, '//a[@slot="full-post-link"]//faceplate-screen-reader-content')

for link in post_links:

post_titles.append(link.text)

# Close the WebDriver

driver.quit()

return post_titles

url = 'https://www.reddit.com/r/Python/'

post_titles = scrape_post_titles(url)

print(f"Post titles: {post_titles}")Run the code by clicking the green arrow button at the top of the screen. If you did everything correctly, you should receive a short message with all the post titles.

Assuming the output is correct, you can add a headless argument to make the browser run everything in the background. We’ll need to make a few small changes when initializing the Chrome web driver:

# Setup WebDriver

options = webdriver.ChromeOptions()

options.add_argument("--headless=new")

options.add_argument('user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3')

driver = webdriver.Chrome(options=options)Two new options are added: the first initializes headless mode, which makes the browser invisible to you. Our second option adds a user agent to prevent Reddit from noticing that we’re attempting to access content with a headless browser. Otherwise the website is likely not to respond as expected.

Scraping Reddit’s Comment Section

Comments are the most difficult part to scrape, primarily because we also need to collect all of the URLs of each post, scroll down to make comments visible, and then parse all of that.

Luckily, we already have a function that does most of the first part. We only need to tweak it to also collect URLs. Let’s start with everything that remains the same except for the function name:

def scrape_post_titles_and_urls(subreddit_url):

"""

Scrapes titles and URLs of posts from the subreddit using Selenium to handle dynamic content loading.

Returns two lists: one for post titles and another for post URLs.

:param subreddit_url: URL of the subreddit page.

:return: A tuple containing two lists, the first with post titles and the second with post URLs.

"""

# Setup WebDriver

options = webdriver.ChromeOptions()

options.add_argument("--headless=new")

options.add_argument('user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3')

driver = webdriver.Chrome(options=options)

# Open the subreddit page

driver.get(subreddit_url)

# Scroll down to load more posts. Adjust the range and sleep duration as needed.

body = driver.find_element(by=By.TAG_NAME, value='body')

for _ in range(10): # Number of Page Downs to simulate

body.send_keys(Keys.PAGE_DOWN)

time.sleep(1) # Sleep to allow content to load; adjust as neededNow, we need to make slight modifications that will separate post titles and URLs. Unfortunately, they’re stored in nested elements, so it’ll be a little harder than adding a few lines:

# Now, scrape the post titles and URLs

post_titles = []

post_urls = []

post_elements = driver.find_elements(By.XPATH, '//a[@slot="full-post-link"]')

for post_element in post_elements:

# Extracting URL

post_url = post_element.get_attribute('href')

# Adjust the URL if it's relative

if post_url.startswith("/"):

post_url = "https://www.reddit.com" + post_url

post_urls.append(post_url)

# Extracting Title

title_element = post_element.find_element(By.TAG_NAME, 'faceplate-screen-reader-content')

post_titles.append(title_element.text)

# Close the WebDriver

driver.quit()

return post_titles, post_urlsWe create two dictionaries, one for titles and one for URLs. After that, the “find_elements” argument selects the parent XPATH element. Once that’s completed, we start a loop that goes through all elements that have the attribute ‘href’, which all will be URLs.

In case the URL is relative, we recreate a full URL by using an if statement. After all of these steps, the full URL is added to the “post_urls” list.

To get the titles, we continue the loop, find another element where the titles are stored, and append the title text to the “post_titles” list. Once the loop completes, the browser closes and returns both dictionaries.

If we need post titles, we can reuse the code we outlined previously. For comments, we’ll need a new function. It should be noted that top-level comments may have so many replies (second-level comments) that a user needs to click “ Load more replies ” to get more of them.

We’ll be only creating a function that extracts all visible top-level comments without any additional interactions:

def scrape_comments_from_post_urls(post_urls):

"""

Visits each post URL, scrolls down to load comments, and parses the HTML to extract comments.

:param post_urls: A list of URLs for the posts.

:return: A dictionary where each key is a post URL and the value is a list of comments for that post.

"""

comments_data = {}

options = webdriver.ChromeOptions()

options.add_argument("--headless=new")

options.add_argument('user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3')

driver = webdriver.Chrome(options=options)As per usual, we start by defining a new function with all of the same driver options as previously. We, however, create a new dictionary “comments_data” where we will map URLs to comments.

for url in post_urls:

driver.get(url)

time.sleep(2) # Wait for initial page load

# Scroll to the end of the page to ensure comments are loaded. Adjust as necessary.

last_height = driver.execute_script("return document.body.scrollHeight")

while True:

driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

time.sleep(2) # Wait to load page

new_height = driver.execute_script("return document.body.scrollHeight")

if new_height == last_height:

break

last_height = new_heightWe start our next section with a loop that goes through our list of URLs. Remember to always first call the scraping function that collects post titles and URLs in order to avoid errors.

Our driver will then visit the page and use JavaScript to calculate the total height of the page and assign it to the object “last_height”. Since the comment section does not have infinite scrolling, we can always find the bottom of the page rather easily by comparing values.

We then start an infinite loop with “while True”, within which we will scroll to the bottom of the page, wait a few seconds, and compare the initially acquired document height with the current height.

If there was new content loaded at any point, the “new_height” value would be different from “last_height”, which will restart the loop. If these two values are equal (no new content has been loaded), the loop will break.

# Extract the page source and parse it

soup = BeautifulSoup(driver.page_source, 'html.parser')

comments = []

for comment in soup.select('shreddit-comment'):

# Assuming the actual comment text is within a div with id attribute formatted as '<thingid>-comment-rtjson-content'

comment_text = comment.select_one('div[id$="-comment-rtjson-content"]')

if comment_text:

comments.append(comment_text.get_text(strip=True))

comments_data[url] = comments

driver.quit()

return comments_data

last_height = new_height

# Example usage

url = 'https://www.reddit.com/r/Python/'

post_titles, post_urls = scrape_post_titles_and_urls(url)

comments_data = scrape_comments_from_post_urls(post_urls)

for url, comments in comments_data.items():

print(f"Comments for {url}:")

for comment in comments:

print(comment)We’ll then take the page source of the entire file (using the object “soup”) and create a list for comments. Since there’ll likely be many comments, we’ll again go through a loop to find them all and append them to the list. Finally, we move all the comments to the dictionary, where the key is the URL and the values are the comments.

For testing purposes, we can use a simple print function. We first add our URL (the Python subreddit in our example), then retrieve the titles and URLs. We use the URLs to run the comment function and output them in a printed state.

Here’s the full code so far. Note that the DOM structure, class naming, and many other features may change over time, so you may need to make modifications:

import requests

from bs4 import BeautifulSoup

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.common.by import By

import time

def fetch_url_content(url, proxies=None):

"""

Fetches content for a given URL using optional proxies.

:param url: URL to fetch content from.

:param proxies: A dictionary of proxies to use for the request.

:return: HTML content of the page or None if an error occurs.

"""

headers = {'User-Agent': 'Mozilla/5.0'}

try:

response = requests.get(url, headers=headers, proxies=proxies, timeout=10)

if response.status_code == 200:

return response.text

else:

print(f"Failed to fetch {url}: Status code {response.status_code}")

return None

except Exception as e:

print(f"An error occurred while fetching {url}: {e}")

return None

def scrape_subreddit_metadata(html_content):

"""

Scrapes subreddit metadata: description, subscriber count, and title.

:param html_content: HTML content of the subreddit page.

:return: A dictionary with metadata.

"""

soup = BeautifulSoup(html_content, 'html.parser')

# Find the <shreddit-subreddit-header> element

subreddit_header = soup.find('shreddit-subreddit-header')

if subreddit_header:

# Extract attributes from the <shreddit-subreddit-header> element

description = subreddit_header.get('description')

subscriber_count = subreddit_header.get('subscribers')

title = subreddit_header.get('display-name')

return {

'description': description,

'subscriber_count': subscriber_count,

'title': title

}

else:

return {

'description': 'Not found',

'subscriber_count': 'Not found',

'title': 'Not found'

}

def scrape_post_titles_and_urls(subreddit_url):

"""

Scrapes titles and URLs of posts from the subreddit using Selenium to handle dynamic content loading.

Returns two lists: one for post titles and another for post URLs.

:param subreddit_url: URL of the subreddit page.

:return: A tuple containing two lists, the first with post titles and the second with post URLs.

"""

# Setup WebDriver

options = webdriver.ChromeOptions()

options.add_argument("--headless")

options.add_argument('user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3')

driver = webdriver.Chrome(options=options)

# Open the subreddit page

driver.get(subreddit_url)

# Scroll down to load more posts. Adjust the range and sleep duration as needed.

body = driver.find_element(by=By.TAG_NAME, value='body')

for _ in range(10): # Number of Page Downs to simulate

body.send_keys(Keys.PAGE_DOWN)

time.sleep(1) # Sleep to allow content to load; adjust as needed

# Now, scrape the post titles and URLs

post_titles = []

post_urls = []

post_elements = driver.find_elements(By.XPATH, '//a[@slot="full-post-link"]')

for post_element in post_elements:

# Extracting URL

post_url = post_element.get_attribute('href')

# Adjust the URL if it's relative

if post_url.startswith("/"):

post_url = "https://www.reddit.com" + post_url

post_urls.append(post_url)

# Extracting Title

title_element = post_element.find_element(By.TAG_NAME, 'faceplate-screen-reader-content')

post_titles.append(title_element.text)

# Close the WebDriver

driver.quit()

return post_titles, post_urls

def scrape_comments_from_post_urls(post_urls):

"""

Visits each post URL, scrolls down to load comments, and parses the HTML to extract comments.

:param post_urls: A list of URLs for the posts.

:return: A dictionary where each key is a post URL and the value is a list of comments for that post.

"""

comments_data = {}

options = webdriver.ChromeOptions()

options.add_argument("--headless=new")

options.add_argument('user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3')

driver = webdriver.Chrome(options=options)

for url in post_urls:

driver.get(url)

time.sleep(2) # Wait for initial page load

# Scroll to the end of the page to ensure comments are loaded. Adjust as necessary.

last_height = driver.execute_script("return document.body.scrollHeight")

while True:

driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

time.sleep(2) # Wait to load page

new_height = driver.execute_script("return document.body.scrollHeight")

if new_height == last_height:

break

last_height = new_height

# Extract the page source and parse it

soup = BeautifulSoup(driver.page_source, 'html.parser')

comments = []

for comment in soup.select('shreddit-comment'):

# Assuming the actual comment text is within a div with id attribute formatted as '<thingid>-comment-rtjson-content'

comment_text = comment.select_one('div[id$="-comment-rtjson-content"]')

if comment_text:

comments.append(comment_text.get_text(strip=True))

comments_data[url] = comments

driver.quit()

return comments_data

# Example usage

url = 'https://www.reddit.com/r/Python/'

post_titles, post_urls = scrape_post_titles_and_urls(url)

comments_data = scrape_comments_from_post_urls(post_urls)

for url, comments in comments_data.items():

print(f"Comments for {url}:")

for comment in comments:

print(comment)

Making Improvements to Our Scraper

Exporting to XSLX or CSV Files

Printing out every specific post is great for debugging but not that useful for data analysis. It’s often best to output everything into a CSV file. For that, we’ll need to install a new library and make some changes:

pip install pandas openpyxlPandas is a library that lets users easily create data frames, which can be converted into various popular file formats such as CSV, JSON, and XLSX. Openpyxl makes it easier to read and write to files like XLSX.

We’ll need to adjust every function to output data frames instead of returning regular Python objects. But first, we need to import our new libraries:

import pandas as pd

import openpyxlNow we’ll adjust the metadata function. Our changes begin after “ title = subreddit_header.get(‘display-name’)”:

# Create a DataFrame

df_metadata = pd.DataFrame({

'description': [description],

'subscriber_count': [subscriber_count],

'title': [title]

})

else:

# Create a DataFrame with 'Not found' values if the subreddit header is not found

df_metadata = pd.DataFrame({

'description': ['Not found'],

'subscriber_count': ['Not found'],

'title': ['Not found']

})

return df_metadataWe are now creating a dataframe object that stores the same data in it and returns it instead of the dictionary.

Adjustments also need to be made to our post title and URL function. Our changes begin after “driver.quit()”:

# Create separate DataFrames for titles and URLs

df_post_titles = pd.DataFrame({

'Title': post_titles

})

df_post_urls = pd.DataFrame({

'URL': post_urls

})

return post_urls, df_post_titles, df_post_urlsAs previously, we return the new dataframes. It’s recommended to also include the “post_urls” list separately, as it’ll make it easier to use it for the comments function.

For the comment function, we’ll need to make several changes. First, we’ll have to recreate our dictionary with new key value pairs so it’s easier to convert it to a dataframe. Then, we’ll have to append the keys and values as previously. However, at the end, we’ll create a dataframe which we will return instead of the dictionary.

comments_data = {'Post URL': [], 'Comment': []}

[unchanged code omitted]

# Extract the page source and parse it

soup = BeautifulSoup(driver.page_source, 'html.parser')

for comment in soup.select('shreddit-comment'):

# Assuming the actual comment text is within a div with id attribute formatted as '<thingid>-comment-rtjson-content'

comment_text = comment.select_one('div[id$="-comment-rtjson-content"]')

if comment_text:

comments_data['Post URL'].append(url)

comments_data['Comment'].append(comment_text.get_text(strip=True))

driver.quit()

# Create a DataFrame from the aggregated comments data

df_comments = pd.DataFrame(comments_data)

return df_commentsFinally, we can then export all data into an Excel file:

# Example usage

url = 'https://www.reddit.com/r/Python/'

response_html = fetch_url_content(url)

df_metadata = scrape_subreddit_metadata(response_html)

post_urls, df_post_titles, df_post_urls = scrape_post_titles_and_urls(url)

df_comments = scrape_comments_from_post_urls(post_urls)

# Initialize ExcelWriter

with pd.ExcelWriter('reddit_data.xlsx', engine='openpyxl') as writer:

df_metadata.to_excel(writer, sheet_name='Subreddit Metadata', index=False)

# Extract subreddit name from the DataFrame

# Assuming 'Title' contains the subreddit name and there's at least one row in df_metadata

if not df_metadata.empty and 'Title' in df_metadata.columns:

subreddit_name = df_metadata['Title'].iloc[0]

else:

subreddit_name = 'Unknown' # Default value in case of missing or empty DataFrame

# Use the extracted subreddit name for naming the sheets

df_post_titles.to_excel(writer, sheet_name=f'Subreddit {subreddit_name} Posts', index=False)

df_comments.to_excel(writer, sheet_name=f'Subreddit {subreddit_name} Comments', index=False)We’re using a sample subreddit - Python - for our URL. We first call the “fetch_url_content” function to get the source code and then collect everything in turn— subreddit metadata, post URLs in two formats, post titles, and comments.

Since we want to do some formatting (that is, export our dataframes into different sheets), we’ll be using openpyxl. As such, we start the ExcelWriter function, assign our file a name, and set the engine to openpyxl.

We then go through each sheet (metadata, titles, and comments) individually and dump the data to each sheet. Note that we’re also using the title from the “df_metadata” dataframe to create a name for the sheets.

Integrating Proxies

Running a few scrapes for a specific post or several of them won’t cause your IP to get banned. If you attempt to run the same code for a large variety of subreddits, getting banned is almost inevitable. You’ll need rotating residential proxies or Reddit proxies to avoid bans.

For our HTML content file, we use the requests library, which has decent proxy support. All you need to do is create a dictionary file outside of your functions with your proxy endpoint:

proxy_endpoint = {

'http': 'http://username:password@proxyserver:port',

'https': 'https://username:password@proxyserver:port'

}You need to simply switch the function argument from “proxies=none” to “proxies=proxy_endpoint”.

def fetch_url_content(url, proxies=proxy_endpoint):For Selenium, things are a little bit more complicated. It’s best if you can whitelist IP addresses, as authentication is the difficult part to handle. In any case, you’ll need to modify the Selenium functions to include:

from selenium.webdriver.common.proxy import Proxy, ProxyType ##required for proxy usage

def scrape_post_titles_and_urls(subreddit_url, proxy=None):

options = webdriver.ChromeOptions()

options.add_argument("--headless")

options.add_argument('user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3')

if proxy:

selenium_proxy = Proxy()

selenium_proxy.proxy_type = ProxyType.MANUAL

selenium_proxy.http_proxy = proxy

selenium_proxy.ssl_proxy = proxy

capabilities = webdriver.DesiredCapabilities.CHROME

selenium_proxy.add_to_capabilities(capabilities)

driver = webdriver.Chrome(options=options, desired_capabilities=capabilities)

else:

driver = webdriver.Chrome(options=options)

[further code unchanged]

def scrape_comments_from_post_urls(post_urls, proxy=None):

comments_data = {'Post URL': [], 'Comment': []}

options = webdriver.ChromeOptions()

options.add_argument("--headless=new")

options.add_argument('user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3')

if proxy:

selenium_proxy = Proxy()

selenium_proxy.proxy_type = ProxyType.MANUAL

selenium_proxy.http_proxy = proxy

selenium_proxy.ssl_proxy = proxy

capabilities = webdriver.DesiredCapabilities.CHROME

selenium_proxy.add_to_capabilities(capabilities)

driver = webdriver.Chrome(options=options, desired_capabilities=capabilities)

else:

driver = webdriver.Chrome(options=options)

Now, if you have whitelisted your IP address, you can create a string object with the IP address and port combination (e.g., “127.0.0.1:8080”). If you can’t whitelist, use Selenium-wire to handle authentication.

Conclusion

For ease of use, we’re providing the entire code block in one go:

import requests

from bs4 import BeautifulSoup

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.common.by import By

from selenium.webdriver.common.proxy import Proxy, ProxyType

import time

import pandas as pd

import openpyxl

proxy_endpoint = {

'http': 'http://username:password@proxyserver:port',

'https': 'https://username:password@proxyserver:port'

}

def fetch_url_content(url, proxies=None):

"""

Fetches content for a given URL using optional proxies.

:param url: URL to fetch content from.

:return: HTML content of the page or None if an error occurs.

"""

headers = {'User-Agent': 'Mozilla/5.0'}

try:

response = requests.get(url, headers=headers, proxies=proxies, timeout=10)

if response.status_code == 200:

return response.text

else:

print(f"Failed to fetch {url}: Status code {response.status_code}")

return None

except Exception as e:

print(f"An error occurred while fetching {url}: {e}")

return None

def scrape_subreddit_metadata(html_content):

"""

Scrapes subreddit metadata: description, subscriber count, and title.

:param html_content: HTML content of the subreddit page.

:return: A dictionary with metadata.

"""

soup = BeautifulSoup(html_content, 'html.parser')

# Find the <shreddit-subreddit-header> element

subreddit_header = soup.find('shreddit-subreddit-header')

if subreddit_header:

# Extract attributes from the <shreddit-subreddit-header> element

description = subreddit_header.get('description')

subscriber_count = subreddit_header.get('subscribers')

title = subreddit_header.get('display-name')

# Create a DataFrame

df_metadata = pd.DataFrame({

'Description': [description],

'Subscriber_count': [subscriber_count],

'Title': [title]

})

else:

# Create a DataFrame with 'Not found' values if the subreddit header is not found

df_metadata = pd.DataFrame({

'description': ['Not found'],

'subscriber_count': ['Not found'],

'title': ['Not found']

})

return df_metadata

def scrape_post_titles_and_urls(subreddit_url, proxy=None):

"""

Scrapes titles and URLs of posts from the subreddit using Selenium to handle dynamic content loading.

Returns two lists: one for post titles and another for post URLs.

:param subreddit_url: URL of the subreddit page.

:return: A tuple containing two lists, the first with post titles and the second with post URLs.

"""

# Setup WebDriver

options = webdriver.ChromeOptions()

options.add_argument("--headless")

options.add_argument('user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3')

if proxy:

selenium_proxy = Proxy()

selenium_proxy.proxy_type = ProxyType.MANUAL

selenium_proxy.http_proxy = proxy

selenium_proxy.ssl_proxy = proxy

capabilities = webdriver.DesiredCapabilities.CHROME

selenium_proxy.add_to_capabilities(capabilities)

driver = webdriver.Chrome(options=options, desired_capabilities=capabilities)

else:

driver = webdriver.Chrome(options=options)

# Open the subreddit page

driver.get(subreddit_url)

# Scroll down to load more posts. Adjust the range and sleep duration as needed.

body = driver.find_element(by=By.TAG_NAME, value='body')

for _ in range(10): # Number of Page Downs to simulate

body.send_keys(Keys.PAGE_DOWN)

time.sleep(1) # Sleep to allow content to load; adjust as needed

# Now, scrape the post titles and URLs

post_titles = []

post_urls = []

post_elements = driver.find_elements(By.XPATH, '//a[@slot="full-post-link"]')

for post_element in post_elements:

# Extracting URL

post_url = post_element.get_attribute('href')

# Adjust the URL if it's relative

if post_url.startswith("/"):

post_url = "https://www.reddit.com" + post_url

post_urls.append(post_url)

# Extracting Title

title_element = post_element.find_element(By.TAG_NAME, 'faceplate-screen-reader-content')

post_titles.append(title_element.text)

# Close the WebDriver

driver.quit()

# Create separate DataFrames for titles and URLs

df_post_titles = pd.DataFrame({

'Title': post_titles

})

df_post_urls = pd.DataFrame({

'URL': post_urls

})

return post_urls, df_post_titles, df_post_urls

def scrape_comments_from_post_urls(post_urls, proxy=None):

"""

Visits each post URL, scrolls down to load comments, and parses the HTML to extract comments.

:param post_urls: A list of URLs for the posts.

:return: A dictionary where each key is a post URL and the value is a list of comments for that post.

"""

comments_data = {'Post URL': [], 'Comment': []}

options = webdriver.ChromeOptions()

options.add_argument("--headless=new")

options.add_argument('user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3')

if proxy:

selenium_proxy = Proxy()

selenium_proxy.proxy_type = ProxyType.MANUAL

selenium_proxy.http_proxy = proxy

selenium_proxy.ssl_proxy = proxy

capabilities = webdriver.DesiredCapabilities.CHROME

selenium_proxy.add_to_capabilities(capabilities)

driver = webdriver.Chrome(options=options, desired_capabilities=capabilities)

else:

driver = webdriver.Chrome(options=options)

for url in post_urls:

driver.get(url)

time.sleep(2) # Wait for initial page load

# Scroll to the end of the page to ensure comments are loaded. Adjust as necessary.

last_height = driver.execute_script("return document.body.scrollHeight")

while True:

driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

time.sleep(2) # Wait to load page

new_height = driver.execute_script("return document.body.scrollHeight")

if new_height == last_height:

break

last_height = new_height

# Extract the page source and parse it

soup = BeautifulSoup(driver.page_source, 'html.parser')

for comment in soup.select('shreddit-comment'):

# Assuming the actual comment text is within a div with id attribute formatted as '<thingid>-comment-rtjson-content'

comment_text = comment.select_one('div[id$="-comment-rtjson-content"]')

if comment_text:

comments_data['Post URL'].append(url)

comments_data['Comment'].append(comment_text.get_text(strip=True))

driver.quit()

# Create a DataFrame from the aggregated comments data

df_comments = pd.DataFrame(comments_data)

return df_comments

# Example usage

url = 'https://www.reddit.com/r/Python/'

response_html = fetch_url_content(url)

df_metadata = scrape_subreddit_metadata(response_html)

post_urls, df_post_titles, df_post_urls = scrape_post_titles_and_urls(url)

df_comments = scrape_comments_from_post_urls(post_urls)

# Initialize ExcelWriter

with pd.ExcelWriter('reddit_data.xlsx', engine='openpyxl') as writer:

df_metadata.to_excel(writer, sheet_name='Subreddit Metadata', index=False)

# Extract subreddit name from the DataFrame

# Assuming 'Title' contains the subreddit name and there's at least one row in df_metadata

if not df_metadata.empty and 'Title' in df_metadata.columns:

subreddit_name = df_metadata['Title'].iloc[0]

else:

subreddit_name = 'Unknown' # Default value in case of missing or empty DataFrame

# Use the extracted subreddit name for naming the sheets

df_post_titles.to_excel(writer, sheet_name=f'Subreddit {subreddit_name} Posts', index=False)

df_comments.to_excel(writer, sheet_name=f'Subreddit {subreddit_name} Comments', index=False)

Author

Justas Vitaitis

Senior Software Engineer

Justas is a Senior Software Engineer with over a decade of proven expertise. He currently holds a crucial role in IPRoyal’s development team, regularly demonstrating his profound expertise in the Go programming language, contributing significantly to the company’s technological evolution. Justas is pivotal in maintaining our proxy network, serving as the authority on all aspects of proxies. Beyond coding, Justas is a passionate travel enthusiast and automotive aficionado, seamlessly blending his tech finesse with a passion for exploration.

Learn more about Justas Vitaitis