5 Data Preprocessing Techniques for Business Productivity

Ryan Yee

Last updated -

In This Article

Data is valuable, especially for businesses. But data is also abundant, and businesses amass data by the boatload. We have so much data that processing it all is becoming more of a burden than an advantage.

So, how can we make data work better for us? How can we turn masses of raw data into a usable tool for growth?

Using data preprocessing techniques is the answer. Read on to understand what data preprocessing is, how it can increase business productivity, and data preprocessing techniques that you can implement in your organization.

What Is Data Preprocessing?

Like any raw resource, raw data can be crude, impractical, and difficult to work with. Raw data needs to be refined before it can be input into a machine learning model and mined for usable information.

For instance, if a company wants to analyze its business call tracking capabilities, it can use data mining to reveal patterns and trends. But raw data is data collected straight from the source, comes in many disparate formats, and is often incomplete. Inputting messy, noisy, and incomplete data into a machine learning model will only create confusing outcomes.

That’s where data preprocessing comes in.

Data preprocessing cleans up raw data and preps it for input into a machine learning algorithm where it can be mined for actionable insights. It takes raw data and turns it into high-quality data.

What Makes High-quality Data?

High-quality data leads to high-quality results. Businesses need high-quality data to make good decisions, like creating new products and improving customer communication strategies .

Bad data can skew those decisions, creating negative business outcomes. But what makes data high-quality?

- Accuracy

False data inputs and data collection errors affect data accuracy.

- Completeness

A complete dataset contains all the information required to fit its purpose. Incomplete data means missing information, which leaves data mining algorithms without the complete picture.

- Consistency

Data is collected in numerous ways from numerous sources. This can create data inconsistencies. If an organization wants to data mine, that is, input data into a single system for analysis, that data needs to have consistent parameters.

- Timeliness

The data must be relevant for the time it’s used. Out-of-date or irrelevant data can skew the model.

- Believability

The data must be considered trustworthy by those who use it.

- Interpretability

The data must be in a format that is easy to interpret by both analysts and machines.

The point of preprocessing data is to achieve all of these high-quality data requisites.

5 Data Preprocessing Techniques for Business Productivity

Let’s look at the data preprocessing techniques that will improve the quality of your business’ data.

1. Assess

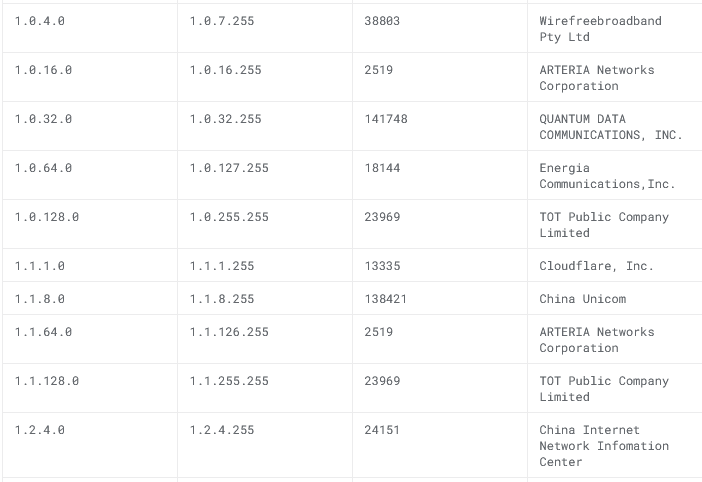

It’s essential to assess your dataset for usability. We can use one of Kaggle’s freely available datasets about global IP data.

Image sourced from kaggle.com

Image sourced from kaggle.com

Your typical dataset will look something like this. However, they also come in a variety of other formats, such as images, videos, and other kinds of text.

If your goal is finding out the number of clicks to your website from a domain sa location, for example, this dataset might work for you.

It’s important to assess the data to ensure it contains the right information and look for missing or inconsistent values that need cleaning up.

2. Cleaning

Cleaning data has different steps based on your data sets, the amount of data, and your particular goals.

The outcome is always the same, though: to reduce noise and create complete data sets that a machine learning model can process.

- Handling missing values

Raw data is often incomplete due to collection errors or respondents omitting information. If you’re developing Dropbox alternatives , for example, and your customer data is missing key information, like the amount of storage space they require, your machine learning model won’t be able to process the data.

Missing values can be replaced or removed.

- Clustering

When dealing with a lot of data, clustering can streamline the cleaning process. Clustering data involves grouping similar data into batches and looking for extreme outliers.

- Regression

Using linear or multiple regression charts, we can find relationships between data and locate and discard erroneous data.

- Binning

Batching data into equal-sized bins creates segments for easier assessment.

3. Reduction

Businesses collect masses of data from direct customer interaction, outside sources, or internal operations like a PBX virtual service. Dealing with the sheer volume can be time-consuming and resource-intensive. There’s storage, maintenance, and computational power to consider.

Machine learning models work hard to analyze data, and anything we can do to minimize the amount of data whilst still maintaining the quality is a positive.

To reduce data, you can use the following techniques:

- Sampling – use a sample of your data instead of the full set.

- Dimensionality reduction – reduce the number of features by either deleting redundant ones or combining multiple features into one.

- Feature selection – select and analyze only the most important features for your goals.

- Compression – compress the data into lossless formats for more efficient storage. Consider a data warehouse migration to cloud storage for better storage solutions.

- Discretization – convert continuous data into a finite set of intervals.

Data reduction saves time, energy, costs, and resources.

4. Integration

Different data sources use different formats, values, and naming conventions. When inputting data into a machine, the data needs consistency.

Data integration is the process of combining data from multiple sources into one unified view. This means fixing inconsistent values like measurement units, date formats, etc., to make sure the full dataset follows uniform rules.

5. Transformation

Transformation is the process of reformatting your data into values readable by a machine learning model. Some data transformation techniques are:

- Normalization – turn a wide array of variables into a consistent range. For example, a scale of 0-1.

- Generalization – turn low-level attributes into high-level attributes, such as using “young” and “senior” instead of age ranges.

- Smoothing – eliminate outliers from datasets to smooth data into more noticeable trends.

- Aggregation – present data in a summary format.

The point of transformation in data preprocessing is to create data that a machine learning algorithm can process more efficiently.

Using Data Preprocessing for Business Productivity

Businesses use data to make sound business decisions, innovate new products, grow, and improve internal processes.

However, data can be messy, erroneous, and difficult to interpret. Machine learning models can automate this process, but only if the inputted data is of high quality.

Data preprocessing is how we turn low-quality data into high-quality data. High-quality data produces high-quality results, giving businesses the information they need to make positive, productive, and future-proof decisions.

Author

Ryan Yee

Copywriter

Ryan is an award-winning copywriter, with over 20 years of experience. Throughout his career, he has collaborated with major US brands, emerging start-ups, and industry-leading tech enterprises. His copy and creative have contributed to the success of companies in the B2B marketing, education, and software sectors, helping them reach new customer bases and enjoy improved results.

Learn more about Ryan Yee